Most AI Code Review Tools Fail

Benchmarking the top 7 AI code review tools

Hey there,

AI tools are getting better at writing code, but reviewing it properly remains a huge challenge.

Often, the comments look helpful at first glance, but you quickly notice they either miss the issues that matter or add noise you still have to sort through.

The real blocker in AI code review

People often assume review quality comes down to the model itself. In practice, the bigger factor is context.

Most tools only look at the diff or a few nearby files. They don’t pull in the dependencies, type definitions, caller chains, test files, or related history that a human reviewer would consider.

Without that context, reviews either overlook critical problems or overcompensate with low-value suggestions.

Context is King!

Was this email forwarded to you? Subscribe here to get your weekly updates directly into your inbox.

How code review quality is measured

So how do you actually judge whether an AI code review is “good”?

Using golden comments: ground-truth issues that a competent human reviewer would be expected to catch. These are real correctness, security, or architectural problems – not style nits or personal preferences.

Every comment from a tool is then checked against the golden comments, which gives three categories:

True positives: tool comments that match a golden comment

False positives: tool comments that are incorrect, irrelevant, or just noise

False negatives: golden comments the tool completely missed
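As a rough illustration of this classification, the sketch below assumes each tool comment has already been matched (by a human or some matching step) to at most one golden comment ID. The names `ToolComment`, `matched_golden_id`, and `classify_comments`, along with the sample data, are hypothetical and not taken from the benchmark.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ToolComment:
    text: str
    # ID of the golden comment this comment matches, or None if it matches nothing.
    # (Hypothetical field: the benchmark's real data format is not described.)
    matched_golden_id: Optional[str]


def classify_comments(comments, golden_ids):
    """Split a tool's output into true positives, false positives, and false negatives."""
    golden_ids = set(golden_ids)
    true_positives = [c for c in comments if c.matched_golden_id in golden_ids]
    false_positives = [c for c in comments if c.matched_golden_id not in golden_ids]
    # Golden comments that no tool comment matched are the misses.
    found = {c.matched_golden_id for c in true_positives}
    false_negatives = sorted(golden_ids - found)
    return true_positives, false_positives, false_negatives


# Made-up example: three golden issues, three tool comments.
golden = {"G1", "G2", "G3"}
comments = [
    ToolComment("Possible null dereference in handler", "G1"),
    ToolComment("Consider renaming this variable", None),
    ToolComment("Missing auth check on endpoint", "G3"),
]
tp, fp, fn = classify_comments(comments, golden)
print(len(tp), len(fp), fn)  # 2 1 ['G2']
```

This simple version counts two comments that match the same golden issue as two true positives; a real evaluation may choose to deduplicate them.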

From there, compute three key metrics:

Precision – How trustworthy is this tool? Of all the comments it made, what percentage were actually correct?

Recall – How comprehensive is it? Of all the real issues (golden comments), how many did it find?

F-score – Overall quality. A combined score that balances both precision and recall.
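To make the three metrics concrete, here is a minimal sketch that computes them from the counts of true positives, false positives, and false negatives. It assumes the F-score is the balanced F1 (the harmonic mean of precision and recall); the source does not say which variant the benchmark used, and the function name and sample counts are made up for illustration.

```python
def review_metrics(tp: int, fp: int, fn: int) -> dict:
    """Compute precision, recall, and F1 from classification counts."""
    # Precision: share of the tool's comments that were correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of the golden comments the tool found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 (assumed variant): harmonic mean of precision and recall.
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Made-up counts: 6 correct comments, 2 noisy ones, 4 missed issues.
scores = review_metrics(tp=6, fp=2, fn=4)
print(scores)  # precision 0.75, recall 0.6, f1 about 0.667
```

Because the harmonic mean is pulled toward the lower of the two values, a tool cannot reach a high F1 by being strong on only one of them.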

High precision keeps developers engaged.

High recall is what makes a tool genuinely useful.

Only systems with strong context retrieval can achieve both.

Benchmark results from 7 AI code review tools

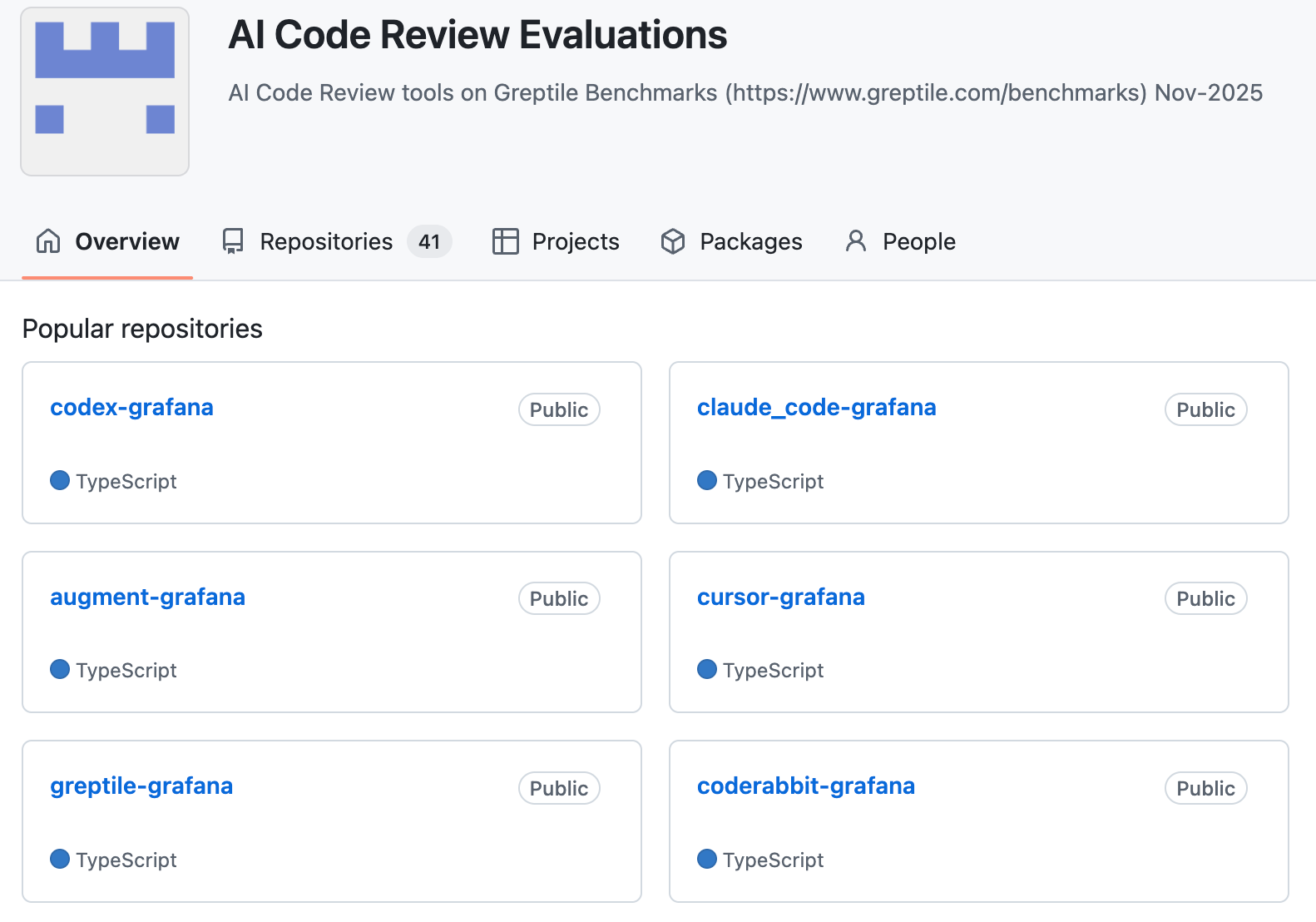

The benchmark measured reviews from the 7 most-used AI code review tools on a public dataset of real pull requests from popular open source projects.

Each pull request includes verified ground truth issues, so the tools could be measured on how many real problems they caught and how much noise they added.

One tool comes out on top: Augment Code Review.

Augment Code Review scored highest on overall quality (F-score).

It achieved both high precision and high recall, which is rare for AI code review tools: most manage only one of the two, usually precision, and struggle with recall because of limited reachability analysis.

What really makes Augment different?

Three things separated Augment from the rest:

A superior context engine: Augment reliably pulled in the right dependency chains, call sites, type definitions, tests, and related modules, so it could reason like a human reviewer rather than just commenting on the diff.

A reasoning-driven agent loop: Instead of a single-pass prompt, Augment iterates: it traverses the repo graph, follows references, and only decides once it has enough information to judge correctness.

Purpose-built code review tuning: It’s tuned to suppress lint-level clutter and focus on real correctness and architectural issues, avoiding the spammy behaviour common in other tools.
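To give a feel for what "following references" from a diff can look like, here is a generic sketch of a breadth-first walk over a file reference graph. It is not Augment's implementation, which the source describes only at a high level; `gather_context`, the `references` mapping, the `max_files` budget, and the file paths are all hypothetical.

```python
from collections import deque


def gather_context(changed_files, references, max_files=20):
    """Breadth-first walk from the changed files over a reference graph.

    `references` maps a file to the files it depends on or is used by
    (a hypothetical, precomputed structure). The walk stops once the
    file budget is reached.
    """
    context = list(dict.fromkeys(changed_files))  # keep order, drop duplicates
    visited = set(context)
    queue = deque(context)
    while queue and len(context) < max_files:
        current = queue.popleft()
        for neighbor in references.get(current, []):
            if neighbor not in visited and len(context) < max_files:
                visited.add(neighbor)
                context.append(neighbor)
                queue.append(neighbor)
    return context


# Made-up repository graph for illustration.
references = {
    "api/handler.py": ["core/auth.py", "core/models.py"],
    "core/auth.py": ["core/tokens.py"],
    "core/models.py": ["tests/test_models.py"],
}
files = gather_context(["api/handler.py"], references, max_files=4)
print(files)
# ['api/handler.py', 'core/auth.py', 'core/models.py', 'core/tokens.py']
```

A diff-only reviewer would stop at the first entry in that list; the extra files are the kind of context the points above refer to.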

Put together, these three ingredients drove Augment’s highest precision, highest recall, and best overall F-score in the evaluation.

How it fits into your workflows

Augment Code Review provides a native GitHub experience for reviewing pull requests. You can configure it to run automatically on every PR or only when you request a review.

Try it out now and let me know what you think!

And it’s a wrap!

See you Friday for the week’s news, upcoming events, and opportunities.

If you found this helpful, share this link with a colleague or fellow DevOps engineer.

Divine Odazie

Founder of EverythingDevOps
