💀My Backup Betrayed Me. Never Again.

Technical Notes From Jubril

Better prompts. Better AI output.

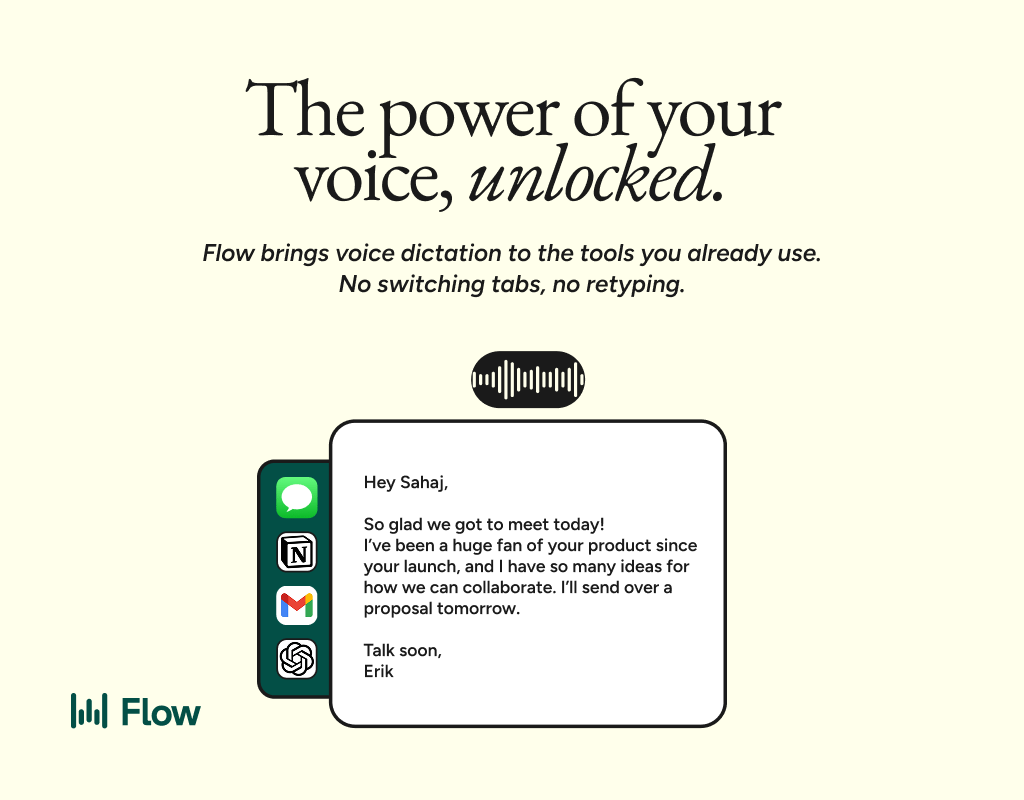

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting. Try Wispr Flow for AI or see a 30-second demo.

Hey there,

Whenever you study data availability, whether in a college class you took all those years ago or while learning about distributed systems, you inevitably come across the 3-2-1 backup rule, which states:

Keep three total copies of your data (original + two backups), store them on at least two different media types (e.g., disk and cloud), and keep at least one copy off-site (e.g., in the cloud or another location)

For the most part, this has always sounded like great advice, and it applied to a good chunk of the production use cases I had run into, though not all of them. That matters because I find you do not really grasp some concepts until you hit the right use case. For me, that happened in my homelab.

The need for backups

We all know backups are important, and why it is important to keep them up to date. In my case, the drive I use for backups turned out to be corrupt, and I needed a separate copy of my data to restore my media server. Thankfully, I had one local copy and was able to restore from it, but it is not hard to imagine a case where the backup drive fails and the local copy is corrupted too.

Making backups more resilient

I have a lot of media I care about, and losing the server becomes more costly the more data I acquire. So how do you work the 3-2-1 backup rule into a homelab? The answer was a lot simpler than I would have imagined.

In my case, I had two strong goals:

Keep a local copy of my configurations on disk for quick restores

Store an off-site copy somewhere reliable that would survive a hardware failure at home

The solution I landed on was Cloudflare R2.

Why R2?

R2 is Cloudflare's object storage service. It is S3-compatible, which means most tools that work with Amazon S3 will also work with R2. The pricing is generous for hobbyists, and there are no egress fees, so pulling your backups down when you need them incurs no bandwidth charges.

My setup

I use Ansible to manage my homelab, so naturally, I wanted my backup configuration to live there too. The approach is straightforward:

A bash script compresses my configuration directory into a tarball

The script uploads that tarball to an R2 bucket using rclone

A cron job runs this every Saturday at midnight

The script excludes files I do not need in backups, like .git directories, node_modules, logs, and cache files. This keeps the backup small and focused on what actually matters.

While the backup runs, three copies of my data exist: the original on disk, a compressed tarball created temporarily during the backup process, and the final copy sitting safely in Cloudflare's infrastructure. The temporary tarball gets cleaned up after upload, which leaves the original and the off-site copy, but I could easily modify this to keep the tarball on an external drive if I wanted that extra layer of safety.

The Tooling: rclone

The tool that makes this possible is rclone. Think of it as rsync for cloud storage. It supports over 70 storage providers, including Amazon S3, Google Drive, Dropbox, and of course, Cloudflare R2.

Because R2 is S3-compatible, configuring rclone is simple. You provide your access key, secret key, and endpoint, then rclone treats your R2 bucket like any other remote destination. The same commands you would use for S3 work identically with R2.
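As a rough illustration of what that configuration looks like, the sketch below renders an rclone remote section for R2. The option names (`type`, `provider`, `access_key_id`, `secret_access_key`, `endpoint`) follow rclone's S3 backend, and the endpoint follows Cloudflare's `<account id>.r2.cloudflarestorage.com` pattern. The remote name `r2`, the helper function, and the placeholder credentials are assumptions for the example.

```python
import configparser
import io


def render_r2_remote(name: str, account_id: str,
                     access_key: str, secret_key: str) -> str:
    """Render an rclone.conf section describing a Cloudflare R2 remote."""
    # interpolation=None so a '%' inside a key is written out literally.
    config = configparser.ConfigParser(interpolation=None)
    config[name] = {
        "type": "s3",
        "provider": "Cloudflare",
        "access_key_id": access_key,
        "secret_access_key": secret_key,
        "endpoint": f"https://{account_id}.r2.cloudflarestorage.com",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder values only; real keys come from the R2 dashboard.
    print(render_r2_remote("r2", "ACCOUNT_ID", "ACCESS_KEY", "SECRET_KEY"))
```

In practice you would more likely run `rclone config` interactively or write this section by hand. The point is only that an R2 remote is an ordinary S3 remote with a Cloudflare endpoint.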

Rclone handles the complexity of multipart uploads, retries on failure, and progress tracking. In my backup script, a single rclone copy command does all the heavy lifting.
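The upload step might look something like the sketch below, again in Python rather than bash. It assumes rclone is installed and that a remote is already configured. The remote name `r2`, the bucket name `homelab-backups`, and both function names are hypothetical.

```python
import subprocess
from pathlib import Path


def build_copy_command(tarball: Path, remote: str, bucket: str) -> list[str]:
    """Build the `rclone copy` invocation that uploads one tarball.

    --progress prints transfer progress; retries and multipart uploads
    are left to rclone's defaults.
    """
    return ["rclone", "copy", str(tarball), f"{remote}:{bucket}", "--progress"]


def upload_and_clean_up(tarball: Path, remote: str = "r2",
                        bucket: str = "homelab-backups") -> None:
    """Upload the tarball, then delete the temporary local copy.

    check=True raises CalledProcessError if rclone exits non-zero, so the
    local tarball is only removed after a successful upload.
    """
    subprocess.run(build_copy_command(tarball, remote, bucket), check=True)
    tarball.unlink()
```

Deleting the tarball only after rclone exits cleanly means a failed upload leaves the archive on disk for a retry. For the Saturday-midnight schedule, a standard crontab entry would start with `0 0 * * 6`, followed by the path to whatever script wraps these steps.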

Resources

If you want to set up something similar, here are the tools I used:

rclone - The Swiss army knife of cloud storage.

Start with their Cloudflare R2 configuration guide

Cloudflare R2 - Object storage with no egress fees. The free tier is generous for personal use.

And it’s a wrap!

See you Friday for the week’s news, upcoming events, and opportunities.

If you found this helpful, share this link with a colleague or fellow DevOps engineer.

Jubril Oyentunji

Chief Technology Officer, EverythingDevOps